Training AI agents with reinforcement learning is easy

Build. Train. Ship.

Get started fine-tuning AI agents with LoRA in fewer than 20 lines of code, or just ask Claude Code.
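LoRA keeps the pretrained weight W0 frozen and learns a low-rank update, so an adapted layer computes h = W0·x + (alpha/r)·B·A·x. The pure-Python sketch below shows that forward pass on a toy 2x2 layer. It illustrates the technique only and is not this platform's API. The function name `lora_forward` and all example values are hypothetical.

```python
from typing import List

Matrix = List[List[float]]  # list of rows
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(w0: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float) -> Vector:
    """Hypothetical helper computing h = W0 x + (alpha / r) * B (A x).

    w0: frozen base weight, shape (d_out, d_in)
    a:  trainable down-projection, shape (r, d_in)
    b:  trainable up-projection, shape (d_out, r)
    """
    r = len(a)                         # LoRA rank = number of rows in A
    scale = alpha / r                  # standard LoRA scaling factor
    base = matvec(w0, x)               # frozen path, never updated
    delta = matvec(b, matvec(a, x))    # low-rank update path, the only trained part
    return [h + scale * d for h, d in zip(base, delta)]


# Toy layer: d_out = d_in = 2, rank r = 1.
w0 = [[1.0, 0.0], [0.0, 1.0]]   # identity base weight
a = [[1.0, 1.0]]                # shape (1, 2)
b = [[0.5], [0.0]]              # shape (2, 1); nonzero so the update is visible
                                # (the original LoRA recipe initializes B to zeros)
x = [2.0, 3.0]
print(lora_forward(w0, a, b, x, alpha=1.0))  # [4.5, 3.0]
```

Because B starts at zero in the usual initialization, the adapted layer initially behaves exactly like the base model, and training only moves the small A and B factors.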

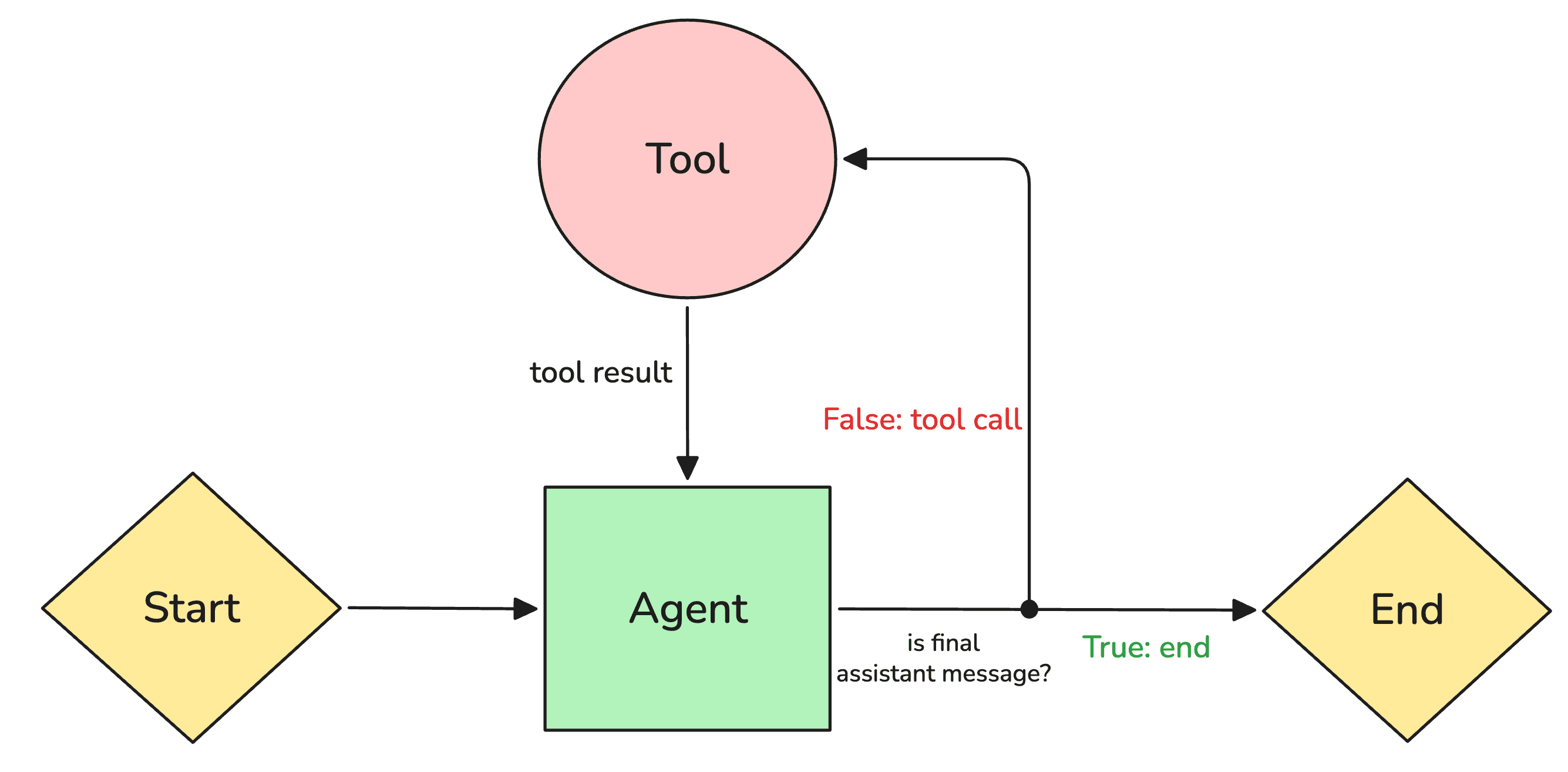

How it works

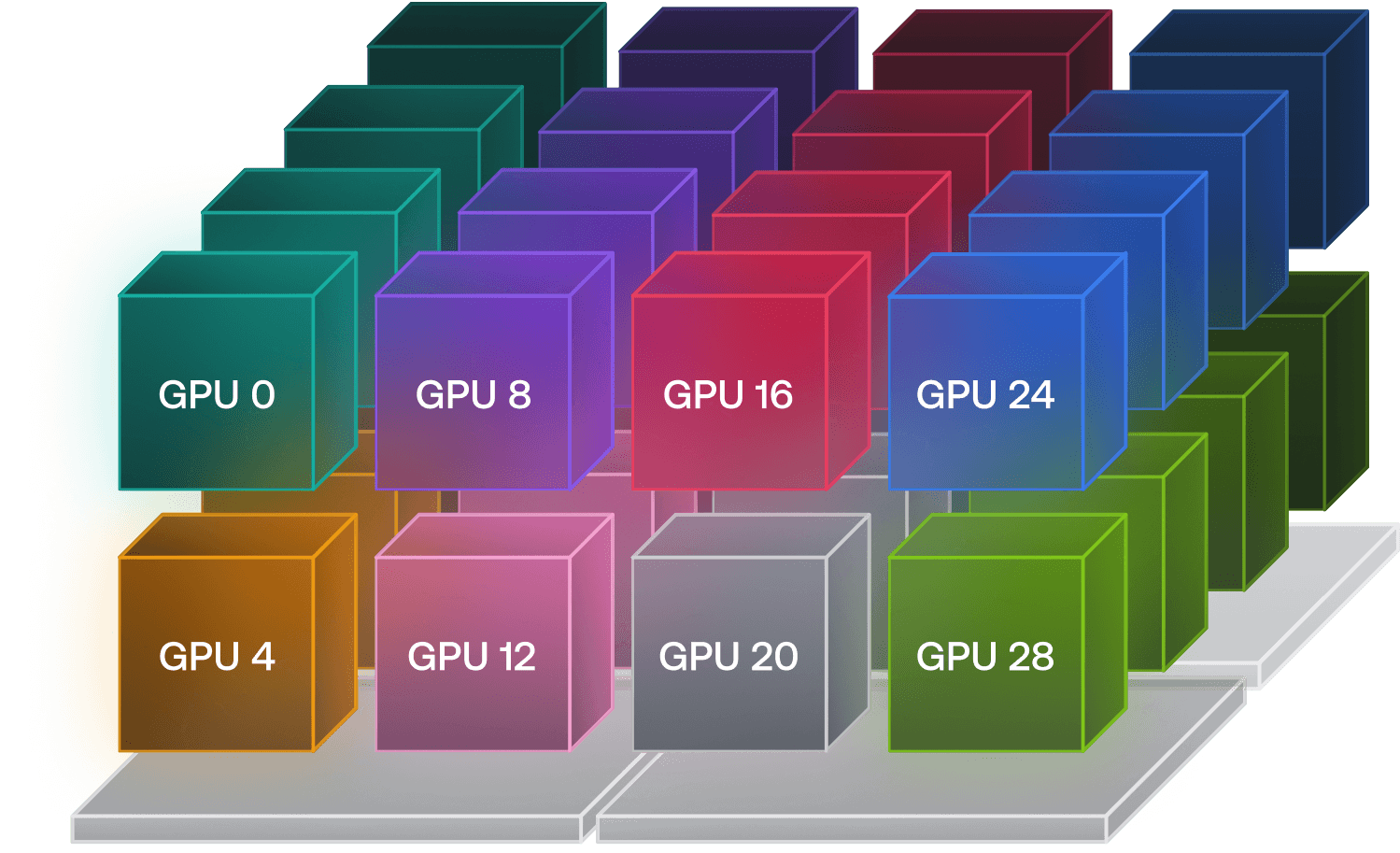

Train Efficiently

Fastest Training, Cheapest Compute

Achieve maximum throughput for LLM fine-tuning with LoRA and significantly reduce compute costs.

(Chart: tokens per second)
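Much of LoRA's cost saving comes from training far fewer parameters. For one weight matrix of shape (d_out, d_in), full fine-tuning updates d_out·d_in values, while a rank-r LoRA adapter trains only r·(d_out + d_in). The sketch below does that arithmetic; the 4096 x 4096 shape and rank 16 are illustrative assumptions, not this platform's defaults.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters for LoRA factors B (d_out x r) and A (r x d_in)."""
    return r * (d_out + d_in)


# Hypothetical 4096 x 4096 projection adapted with rank 16.
d = 4096
full = full_finetune_params(d, d)
lora = lora_params(d, d, r=16)
print(full, lora, f"{lora / full:.2%}")  # 16777216 131072 0.78%
```

In this example the adapter trains under 1% of the layer's parameters, which shrinks gradient and optimizer-state memory accordingly.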

Open Source Support

Wide Model Support

Support for leading open-source models such as Qwen, DeepSeek, and GPT-OSS.

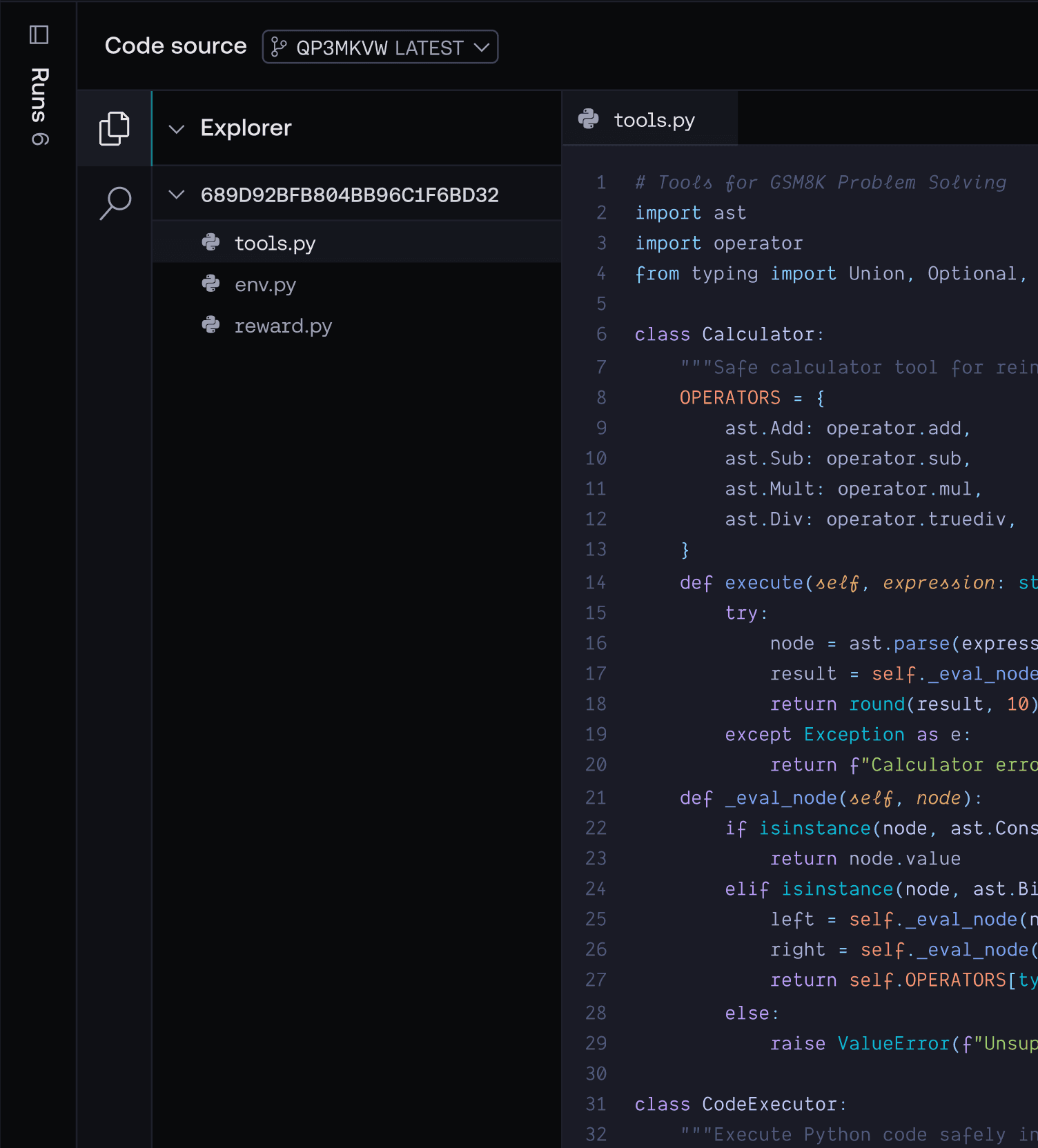

Agent Training Observability

Advanced Telemetry

Intelligent telemetry to evaluate, monitor, and iterate on LLM-powered AI agents.
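A common signal to monitor during RL training is the distribution of episode rewards at each training step. The sketch below aggregates rewards per step into simple summary statistics. `RewardTracker` is a hypothetical helper written for illustration; it is not this platform's telemetry API.

```python
import statistics
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class RewardTracker:
    """Hypothetical helper: collects episode rewards grouped by training step."""
    rewards: Dict[int, List[float]] = field(default_factory=dict)

    def log(self, step: int, reward: float) -> None:
        # Append one episode's reward to the list for this step.
        self.rewards.setdefault(step, []).append(reward)

    def summary(self, step: int) -> Dict[str, float]:
        # Summarize all rewards logged at a step (population std dev).
        values = self.rewards[step]
        return {
            "mean": statistics.mean(values),
            "stdev": statistics.pstdev(values),
            "min": min(values),
            "max": max(values),
        }


tracker = RewardTracker()
for r in [0.0, 1.0, 1.0, 0.0]:   # e.g. pass/fail rewards from four rollouts
    tracker.log(step=1, reward=r)
print(tracker.summary(1))  # {'mean': 0.5, 'stdev': 0.5, 'min': 0.0, 'max': 1.0}
```

Watching the mean rise while the spread stays nonzero is one quick way to tell that the policy is improving without collapsing to a single behavior.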

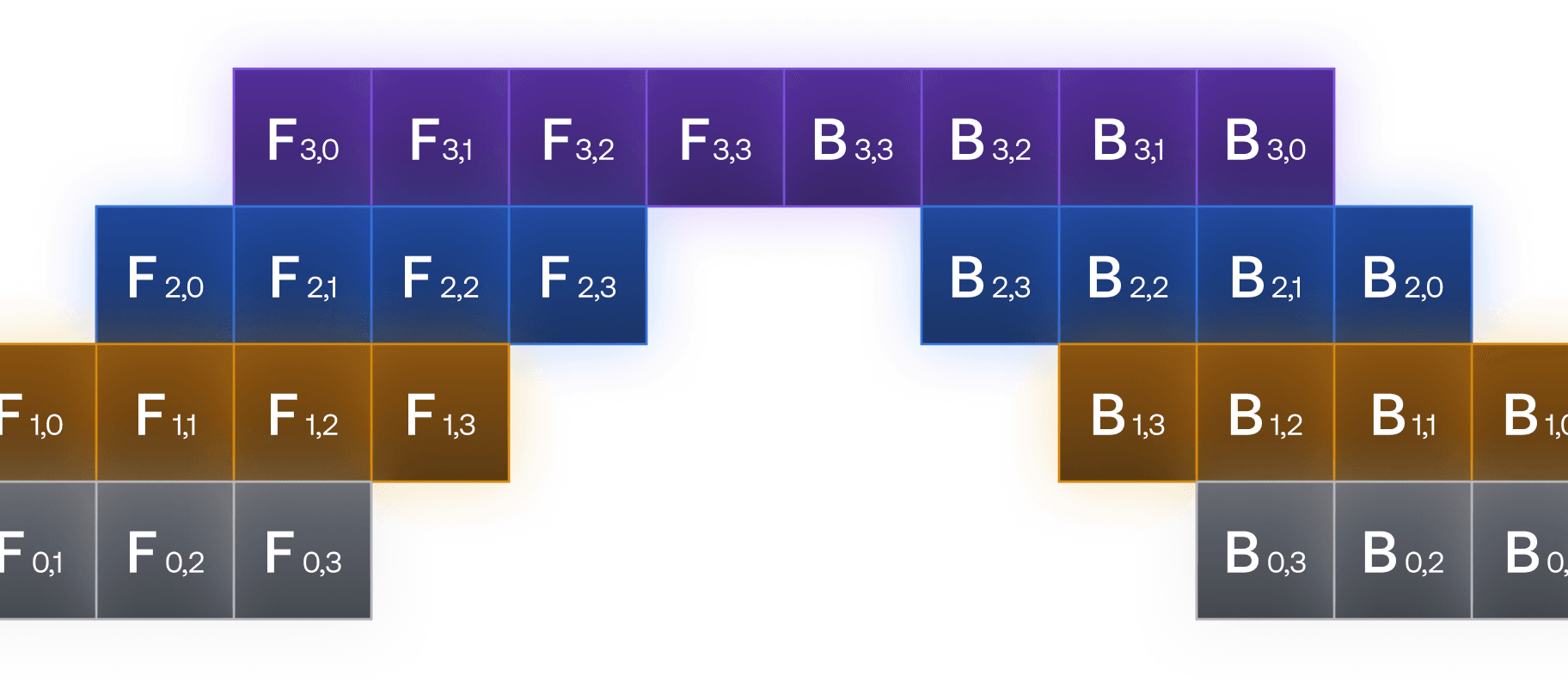

Multi-Turn Intelligence

Long Horizon Tasks

Train with context lengths from 32K to 1 million tokens without degradation.

Build vertical agents for multi-turn and long-running tasks.
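In multi-turn training, every user message, assistant reply, and tool result accumulates in the context, so long-running trajectories must fit the model's context window. The sketch below totals a trajectory's tokens and checks it against a limit. The whitespace tokenizer is a hypothetical stand-in (real models use subword tokenizers, so actual counts will differ), and the role/content message format is an assumption.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed shape: {"role": ..., "content": ...}


def count_tokens(text: str) -> int:
    """Placeholder tokenizer: counts whitespace-separated words only."""
    return len(text.split())


def trajectory_tokens(messages: List[Message]) -> int:
    """Total tokens across all turns of a multi-turn agent trajectory."""
    return sum(count_tokens(m["content"]) for m in messages)


def fits_in_context(messages: List[Message], max_context: int) -> bool:
    """True if the whole trajectory fits in the context window."""
    return trajectory_tokens(messages) <= max_context


# Hypothetical coding-agent trajectory with a tool call.
trajectory = [
    {"role": "user", "content": "Find the failing test and fix it"},
    {"role": "assistant", "content": "Running the test suite now"},
    {"role": "tool", "content": "1 failed test_parse_date"},
    {"role": "assistant", "content": "Patched the date parser"},
]
print(trajectory_tokens(trajectory), fits_in_context(trajectory, max_context=32_768))  # 19 True
```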

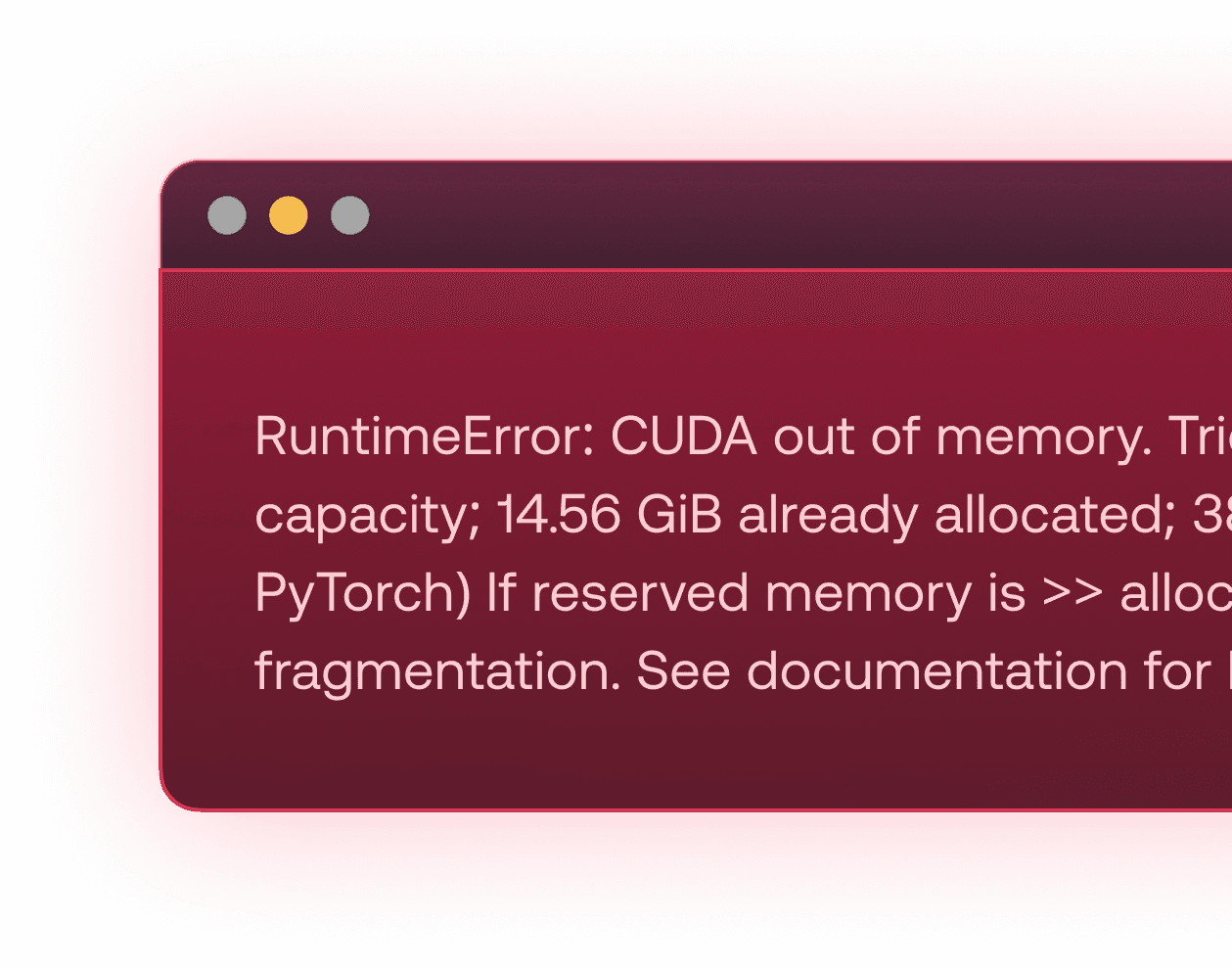

Predictable Performance

Focus on finetuning your AI agents

instead of dealing with:

OOM errors

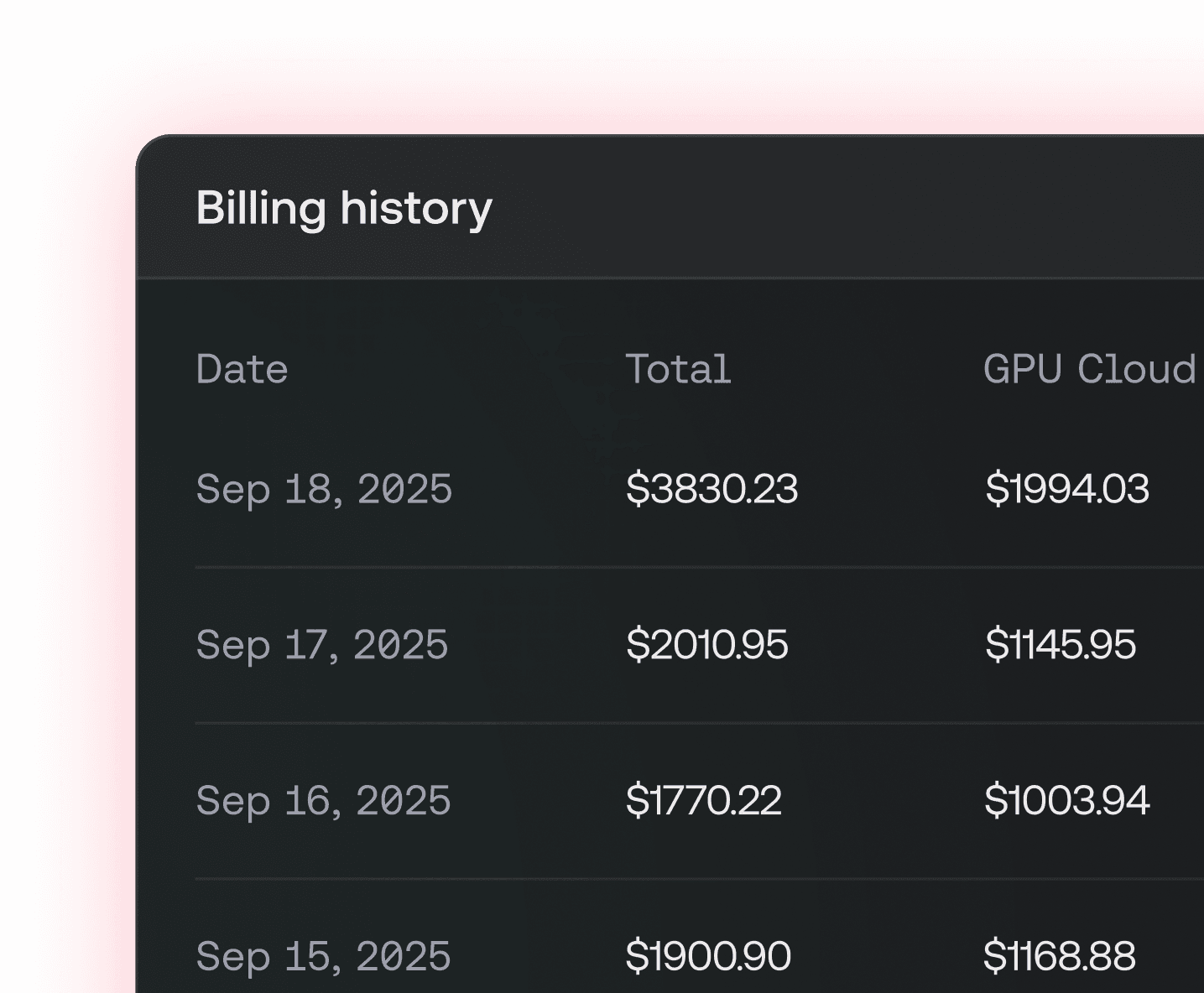

Hefty debug bills

GPU infrastructure

Performance optimizations
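OOM errors usually come from underestimating how much memory training state needs. A widely used rule of thumb for mixed-precision Adam is about 16 bytes per trainable parameter (2 for bf16 weights, 2 for gradients, 12 for the fp32 master copy and two Adam moments), while frozen weights need only their 2 bytes. The sketch below applies that rule; it ignores activations, KV cache, and framework overhead, and the 8B-parameter model and 20M-parameter adapter are hypothetical sizes.

```python
BYTES_PER_GB = 1e9  # decimal gigabytes


def estimate_memory_gb(
    frozen_params: int,
    trainable_params: int,
    weight_bytes: int = 2,      # bf16/fp16 weights
    grad_bytes: int = 2,        # gradients in the weight dtype
    optimizer_bytes: int = 12,  # fp32 master weights + Adam m and v (4 + 4 + 4)
) -> float:
    """Rough weight + gradient + optimizer memory; activations are not included."""
    frozen = frozen_params * weight_bytes
    trainable = trainable_params * (weight_bytes + grad_bytes + optimizer_bytes)
    return (frozen + trainable) / BYTES_PER_GB


# Full fine-tuning: every parameter of a hypothetical 8B model is trainable.
full = estimate_memory_gb(frozen_params=0, trainable_params=8_000_000_000)
# LoRA: the 8B base stays frozen; only a hypothetical 20M-parameter adapter trains.
lora = estimate_memory_gb(frozen_params=8_000_000_000, trainable_params=20_000_000)
print(f"full: {full:.1f} GB, LoRA: {lora:.2f} GB")  # full: 128.0 GB, LoRA: 16.32 GB
```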

Start fine-tuning language models in three easy steps.

1.

Set up your

environment

2.

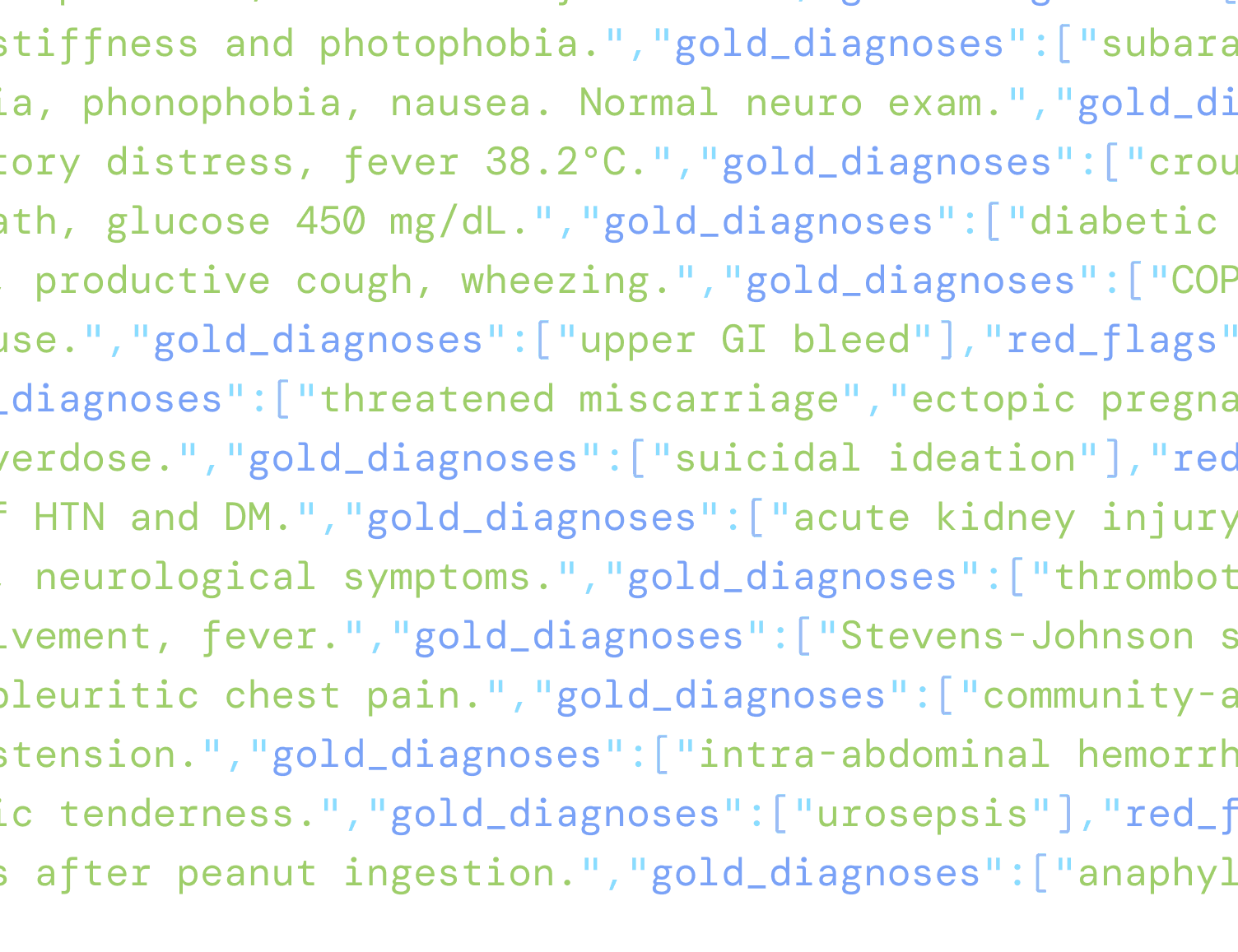

Add your data

in JSONL

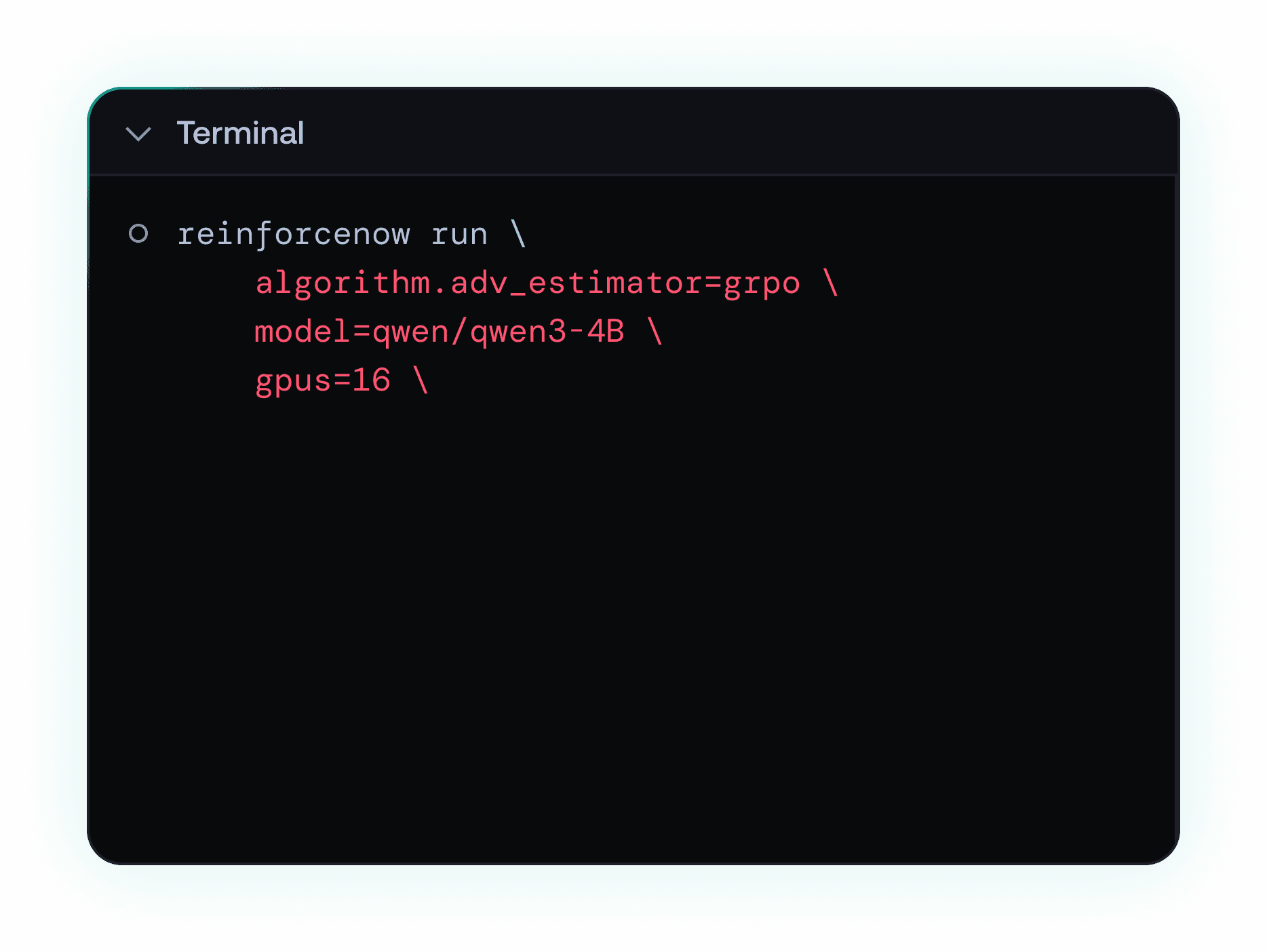

3.

Press Enter
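JSONL (JSON Lines) stores one JSON object per line. The page does not specify the record schema, so the `messages` list of role/content pairs used below is an assumption modeled on common chat fine-tuning datasets; check the platform's documentation for the exact fields it expects. The sketch writes a small dataset to a temporary file and reads it back.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List

# Hypothetical schema: one conversation per line under a "messages" key.
examples: List[Dict] = [
    {"messages": [
        {"role": "user", "content": "What is 2 + 2?"},
        {"role": "assistant", "content": "4"},
    ]},
    {"messages": [
        {"role": "user", "content": "Name a prime number."},
        {"role": "assistant", "content": "7"},
    ]},
]


def write_jsonl(path: Path, records: List[Dict]) -> None:
    """Write each record as a single JSON object on its own line."""
    with path.open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_jsonl(path: Path) -> List[Dict]:
    """Parse a JSONL file, skipping blank lines."""
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "train.jsonl"
    write_jsonl(path, examples)
    loaded = read_jsonl(path)

print(loaded == examples)  # True
```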

FAQ

More details you might want to know:

Our AI agent development platform manages the entire RL infrastructure and helps you quickly iterate on RL experiments, so you don’t waste valuable time setting it up.

You can focus on building your agent and collecting data, then run training from your CLI.